Safeguarding Enterprise Software: Protecting Against Security Pitfalls of Generative AI and LLMs

In today's digital landscape, the transformative power of artificial intelligence (AI) cannot be overstated. Enterprises across various industries are already exploring ways to leverage AI to improve productivity and enhance customer experiences. One area that has witnessed significant advancements is the usage of Generative AI and Large Language Models (LLMs). However, as cybersecurity professionals, it is crucial to understand the potential security pitfalls associated with their implementation. In this blog, we explore how generative AI and LLMs can be utilized in enterprise software, and discuss effective strategies to guard against the inherent risks.

Let's delve into the two primary applications of these technologies in enterprise software.

- Internal Applications: Generative AI and LLMs can significantly enhance productivity by assisting employees in various tasks. For instance, natural language processing (NLP) models can be deployed to automate data analysis, generate reports, and provide real-time insights. Chatbots powered by LLMs can handle routine inquiries, allowing employees to focus on more complex issues. Moreover, these models can aid in code generation, data cleaning, and other repetitive tasks, saving valuable time and effort.

- External Applications: When it comes to customer-facing applications, generative AI and LLMs have the potential to elevate user experiences. Personalized recommendations, virtual assistants, and intelligent search engines are just a few examples of how AI can augment interactions with customers. By leveraging these technologies, enterprises can gain a competitive edge by delivering tailored and intuitive experiences, ultimately leading to increased customer satisfaction and loyalty.

While the benefits of generative AI and LLMs are evident, it is essential to acknowledge the potential risks and liabilities associated with their usage.

- Unintentional Data Sharing: One significant concern is the inadvertent sharing of sensitive data with third parties. For example, when utilizing AI models like OpenAI ChatGPT or Google Bard, it is possible for confidential information to be transmitted unknowingly. This poses a significant liability, as sensitive data breaches can result in regulatory penalties, reputational damage, and loss of customer trust.

- Biased Responses: Another challenge arises from the potential bias within the responses generated by LLMs. While these models are trained on vast amounts of data, bias can inadvertently seep into their output. This can lead to legal ramifications and reputational harm for enterprises, particularly in scenarios where biased responses are discriminatory or offensive.

Several vulnerabilities disclosed in OpenAI's services after release, such as the one in an open source Redis library that resulted in a data breach and potential account takeover (ATO), show how critical it is to protect against these risks.

Different Security Enforcement Points for OpenAI Traffic

Protecting Against Risks

Traditional web application firewalls (WAFs) and similar tools are often insufficient for mitigating the risks associated with generative AI and LLMs. Rather than blocking all requests from specific IPs, geographies, or users, enterprises need a more nuanced approach. Companies like Walmart, Amazon, Salesforce, JP Morgan Chase, Verizon and others have already restricted their internal usage of OpenAI until controls for sensitive data access are in place. Given that integrations with LLMs and AI are typically API-based, implementing a robust API security solution is vital. This solution should focus on monitoring and protecting individual transactions in real time, identifying and blocking sensitive or inappropriate content, and ensuring data privacy. We certainly don't want an internal employee or an external customer to be permanently banned from using AI because they mistakenly sent sensitive data in a prompt once.

Traditional edge-based security products like next-generation firewalls and proxies can stop users from sending sensitive data to OpenAI. However, they have no visibility into or control over traffic that goes back and forth between applications, primarily via internal and partner APIs. Sensitive data traversing modern applications over unprotected APIs, which present a large attack surface, is already a top concern for CSOs, and applications leveraging OpenAI will only exacerbate the problem.
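To make the per-transaction idea concrete, here is a minimal Python sketch. It is not Traceable's implementation; the pattern set and the names `SENSITIVE_PATTERNS`, `Verdict`, and `guard_request` are hypothetical and chosen only for illustration. It inspects each outbound prompt on its own and keeps no per-user state, so one blocked prompt does not turn into a ban.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical detectors for illustration only; a real deployment would
# rely on vetted, much broader rules.
SENSITIVE_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


@dataclass
class Verdict:
    allowed: bool
    findings: list[str]


def guard_request(user_id: str, prompt: str) -> Verdict:
    """Decide on this single request only.

    user_id is accepted for logging or auditing purposes, but no
    per-user state is stored, so a blocked prompt never affects the
    same user's later requests.
    """
    findings = [
        name
        for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(prompt)
    ]
    return Verdict(allowed=not findings, findings=findings)
```

In this sketch, a prompt containing something shaped like a Social Security number is rejected, while the next prompt from the same user is evaluated from scratch and passes if it is clean.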

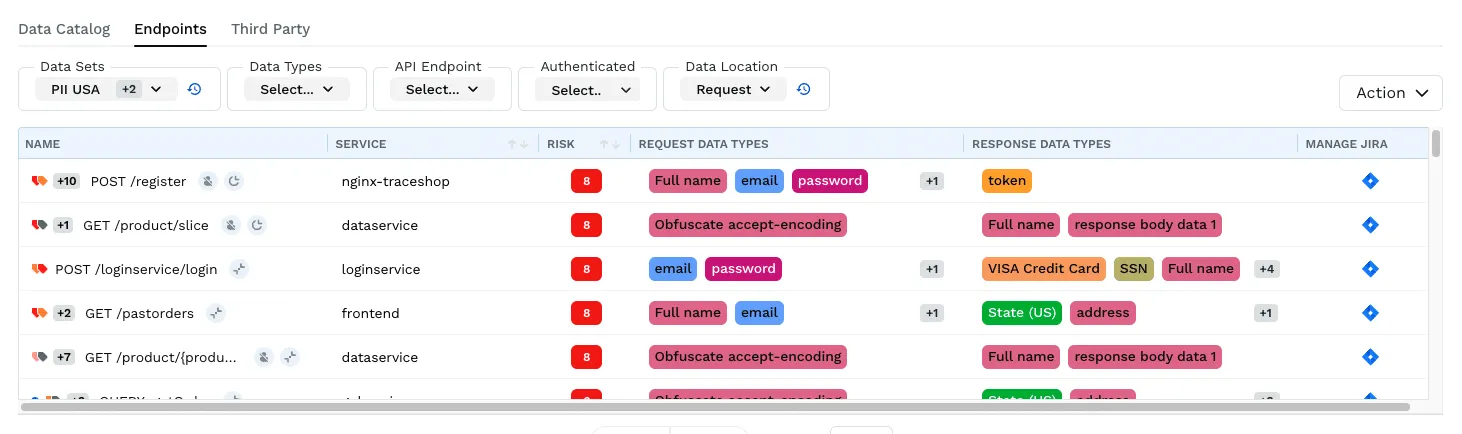

Fine-grained security controls for OpenAI-dependent applications

Among the various API security solutions available, Traceable stands out as the ideal choice for enterprises looking to safeguard against security pitfalls associated with generative AI and LLMs. Here's why:

- Comprehensive Data Collection Choices: Unlike Traceable, most API security solutions do not offer runtime protection. Those that do collect data only at the edge (load balancers, gateways, etc.). That will not work in this case because requests to third parties such as OpenAI ChatGPT are often generated internally. Traceable is the only API security vendor that can instrument applications written in any language, allowing us to capture data from anywhere in the stack.

- Identifying Sensitive Data in Transit: Traceable comes equipped with pre-built rules that can identify sensitive data in transit. Moreover, it allows customers to customize and bring their own data sets, ensuring comprehensive coverage and protection.

- Transaction-Level Blocking: Unlike traditional tools, Traceable provides the granularity required to address the risks associated with AI-powered applications. With its transaction-level blocking capabilities, enterprises can proactively prevent sensitive data leakage and mitigate potential liabilities effectively. API responses typically carry well-formed JSON bodies with key-value pairs for sensitive data. With OpenAI queries, that changes to free-form data, which aligns with broader Data Loss Prevention (DLP) use cases. Traceable's protection capabilities have focused on DLP from the get-go for this reason, and with these new capabilities we take it to the next level to realize API DLP for a broad range of data patterns flowing over APIs.
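The shift from key-value JSON to free-form prompts can be illustrated with a short Python sketch. This is an assumption-laden example rather than a description of how Traceable detects data: the key names in `SENSITIVE_KEYS`, the default pattern, and the functions `scan_structured` and `scan_free_form` are all hypothetical. The `extra_patterns` argument stands in for customer-supplied data sets.

```python
from __future__ import annotations

import json
import re

# Hypothetical key names that mark a JSON field as sensitive.
SENSITIVE_KEYS = {"ssn", "password", "card_number"}

# Hypothetical default content pattern for free-form text.
DEFAULT_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan_structured(body: str) -> set[str]:
    """Flag sensitive fields in a JSON body by key name, including nested ones."""
    found: set[str] = set()

    def walk(node: object) -> None:
        if isinstance(node, dict):
            for key, value in node.items():
                if key.lower() in SENSITIVE_KEYS:
                    found.add(key.lower())
                walk(value)
        elif isinstance(node, list):
            for item in node:
                walk(item)

    walk(json.loads(body))
    return found


def scan_free_form(
    text: str, extra_patterns: dict[str, re.Pattern[str]] | None = None
) -> set[str]:
    """Flag sensitive content in free-form text by matching its shape.

    extra_patterns lets a caller add its own detectors on top of the defaults.
    """
    patterns = {**DEFAULT_PATTERNS, **(extra_patterns or {})}
    return {name for name, pattern in patterns.items() if pattern.search(text)}
```

A key-based scan finds `ssn` in a body such as `{"user": {"ssn": "..."}}`, but it reports nothing when the same number is buried inside a `prompt` string, because no key announces it. The content-based scan catches that case, and a custom pattern (for example, an internal employee ID format) extends coverage without changing the scanner.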

The Bottom Line

Generative AI and LLMs have ushered in a new era of productivity and customer experience improvements for enterprises. However, it is vital to understand and address the associated security risks. By adopting a comprehensive API security solution like Traceable, enterprises can confidently harness the power of generative AI and LLMs while protecting sensitive data, ensuring compliance, and mitigating potential liabilities. As cybersecurity professionals, it is our responsibility to stay proactive and safeguard the future of enterprise software.

About Traceable

Traceable is the industry’s leading API Security company that helps organizations achieve API protection in a cloud-first, API-driven world. With an API Data Lake at the core of the platform, Traceable is the only intelligent and context-aware solution that powers complete API security – security posture management, threat protection and threat management across the entire Software Development Lifecycle – enabling organizations to minimize risk and maximize the value that APIs bring to their customers. To learn more about how API security can help your business, book a demo with a security expert.
