Traceable's Application Security Platform

The industry's only context-aware Application Security platform that powers complete protection (security posture management, threat protection, and threat management) across the entire software development lifecycle (SDLC).

Deploy with Confidence

With an extensive range of deployment options, you can discover and secure APIs across your entire digital enterprise.

Self-Managed

On-Prem or Cloud

Cloud

AWS, GCP, Azure, or customer datacenter

SaaS

Software as a Service

About Our Platform

Application and API Posture Management

Traceable automatically and continuously discovers and builds an inventory of every API in your organization, including internal, private, public or externally exposed, rogue, shadow, partner, and third-party APIs.

Traceable continuously discovers APIs and tracks changes to them using on-premises, cloud, and in-code components, integrations with API management platforms, network traffic endpoints, and even workloads via eBPF.

Discovery and Security Posture Management

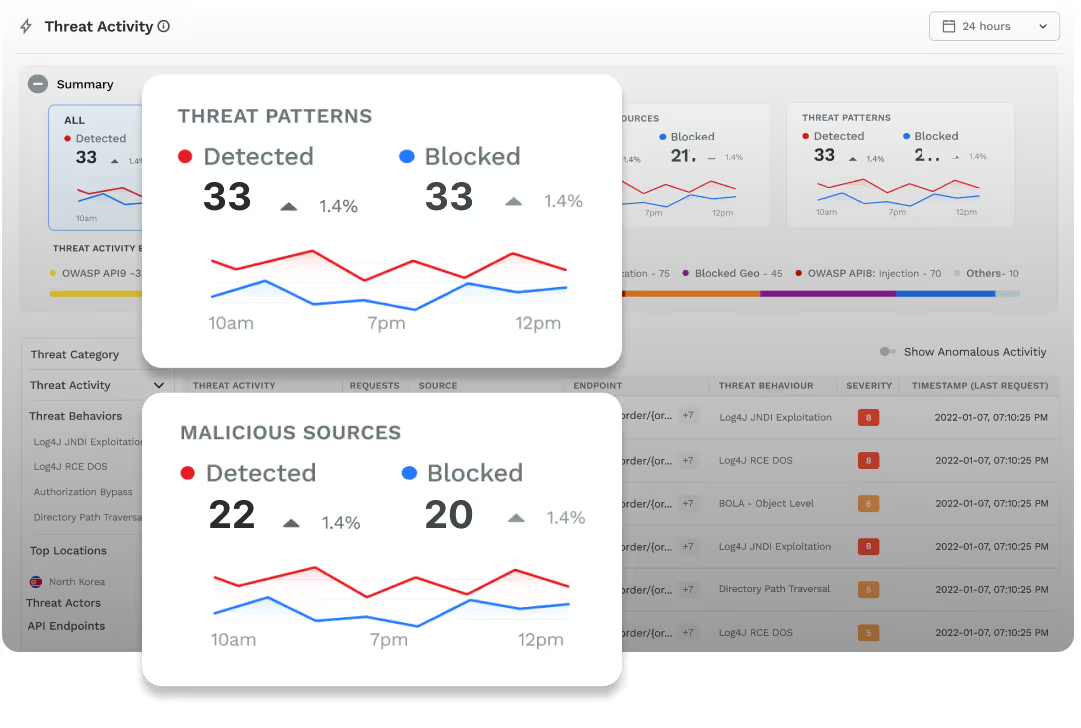

Attack Detection and Threat Hunting

With Traceable, you can identify, assess, and mitigate application and API security threats to your organization, reveal unknown attacks, and visualize user behavior analytics to uncover fraud and abuse. Traceable’s platform provides a comprehensive set of application and API security analytics and data flow analytics. These help your SOC team, incident responders, threat hunters, and red and blue teams find issues, detect threats, and discover attacks as they occur.

Attack Runtime Protection

Using contextual analysis of your applications and APIs, along with a complete understanding of how API activity, user activity, data flow, and code execution interconnect, Traceable automatically detects and blocks known and unknown attacks, business logic abuse, DDoS attacks, abusive bot activity, and sensitive data exfiltration in your production environments.

Attack Protection

Contextual API Security Testing

Traceable requires zero configuration and has no dependency on OpenAPI specification files or Postman collections. It enables your security and development teams to proactively test for vulnerable APIs using real context from active API traffic.

Learn how context is key to comprehensive API security

API Security Resources

WHITEPAPER

The Definitive Guide to API Security

SOLUTIONS BRIEF

API Data Lake for Context-Based API Security

WHITEPAPER

API Security Reference Architecture for Zero Trust

See Traceable in Action

Learn how to elevate your API security today.
